In AI-driven disaster response, you face ethical dilemmas such as balancing urgent aid against privacy protection, avoiding biased algorithms, and ensuring accountability for decisions made by machines. If biases and data practices go unchecked, these systems can misuse sensitive data or overlook vulnerable groups. You need safeguards that prevent harm and keep aid distribution fair.

Key Takeaways

- Ensuring transparency and accountability in AI decision-making to prevent harm and clarify responsibility during disasters.

- Addressing algorithmic bias to promote equitable aid distribution and avoid neglecting vulnerable populations.

- Protecting personal privacy rights while collecting data essential for effective disaster response efforts.

- Balancing AI efficiency with ethical considerations, such as fairness, dignity, and avoiding misuse of technology.

- Implementing technical and ethical safeguards to prevent malfunctions, data breaches, and misuse during critical situations.

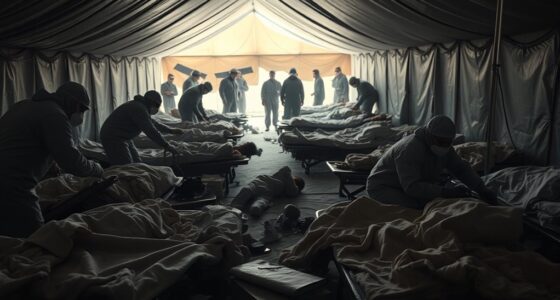

As artificial intelligence becomes increasingly integral to disaster response efforts, ethical questions inevitably emerge. You might wonder how deploying AI tools can impact individuals’ privacy or whether these systems are fair and unbiased. Privacy is a major concern because AI often relies on vast amounts of personal data to identify at-risk populations, predict disaster impacts, or allocate resources efficiently. When such sensitive information is collected and analyzed, there’s a risk that it could be mishandled or misused, leading to breaches that threaten individuals’ safety and dignity. You need to consider whether data collection practices respect privacy rights and whether proper safeguards are in place to prevent unauthorized access. Transparency about how data is gathered, stored, and used is essential to maintaining public trust in AI-driven disaster responses.

AI in disaster response raises privacy concerns and emphasizes the need for transparency and safeguards to protect individuals’ rights.

Simultaneously, algorithmic bias presents another significant ethical dilemma. AI systems are only as good as the data they’re trained on, which often contains historical biases or uneven representations. If the training data is skewed, the AI might prioritize certain communities over others or overlook vulnerable populations altogether. This could result in unequal distribution of aid, misidentification of needs, or overlooked risks in marginalized groups. When deploying AI in disaster scenarios, you must ask whether the algorithms are fair and inclusive, and whether measures are taken to mitigate bias. Failing to address algorithmic bias not only reduces the effectiveness of disaster response but also risks exacerbating existing inequalities, potentially causing harm to those who need help most. Additionally, designing these AI systems to meet established safety and reliability standards can prevent technical malfunctions that could worsen emergency situations.

Furthermore, ethical concerns extend to accountability. When AI systems make decisions that impact lives—such as prioritizing evacuations or distributing resources—you need clarity on who’s responsible if something goes wrong. If an AI system inadvertently causes harm due to bias or privacy breaches, determining accountability can be complex. This raises questions about oversight, regulation, and the role of human judgment in decision-making processes. You should advocate for transparent protocols that define accountability and ensure human oversight remains integral to AI deployment.

In essence, as you leverage AI to save lives and manage crises, you must balance technological efficiency with ethical responsibility. Protecting privacy, combating algorithmic bias, and establishing clear accountability are crucial steps to ensure that AI enhances disaster response without infringing on individual rights or perpetuating injustices. Addressing these concerns proactively will help foster trust and ensure that AI serves everyone fairly and ethically during times of crisis.

Frequently Asked Questions

How Do AI Systems Prioritize Lives During Disasters?

AI systems prioritize lives during disasters according to criteria that their designers and operators build in, and those criteria reflect moral frameworks and ethical trade-offs. The systems analyze data quickly to identify those most at risk, often based on factors like vulnerability or proximity to the hazard. While aiming to save the most lives, they also face dilemmas, such as balancing individual needs against overall safety. Because these are complex ethical decisions, transparency and human oversight are vital to keeping prioritization fair and responsible.
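As a rough illustration of ranking by vulnerability and proximity, here is a minimal sketch. The `Household` fields, the weights, and the 50 km cutoff are all hypothetical values chosen for the example, not figures from any real system. The output is a ranked list intended for human review, not an automatic decision.

```python
from dataclasses import dataclass


@dataclass
class Household:
    household_id: str
    vulnerability: float  # hypothetical pre-computed score, 0.0 (low) to 1.0 (high)
    distance_km: float    # hypothetical distance from the hazard


def priority_score(h: Household, w_vuln: float = 0.6, w_prox: float = 0.4,
                   max_distance_km: float = 50.0) -> float:
    """Weighted score in [0, 1]; higher means respond sooner.

    The weights and distance cutoff are illustrative assumptions.
    """
    # Proximity is 1.0 at the hazard and falls to 0.0 at max_distance_km or beyond.
    proximity = max(0.0, 1.0 - h.distance_km / max_distance_km)
    return w_vuln * h.vulnerability + w_prox * proximity


def rank_for_review(households):
    """Return households sorted by score, highest first, for a human to review."""
    return sorted(households, key=priority_score, reverse=True)


households = [
    Household("A", vulnerability=0.9, distance_km=30.0),
    Household("B", vulnerability=0.2, distance_km=2.0),
    Household("C", vulnerability=0.7, distance_km=5.0),
]
ranked = rank_for_review(households)
for h in ranked:
    print(h.household_id, round(priority_score(h), 3))
```

Changing the weights changes who comes first, which is exactly why the choice of weights is an ethical decision and should be documented and open to review.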

What Are the Privacy Concerns With AI Data Collection?

You should be aware that AI data collection raises privacy concerns, especially around data anonymization and consent management. If data isn’t properly anonymized, personal details could be exposed or misused. Without clear consent management, people’s data may also be used in ways they never agreed to, which is itself a privacy violation. Robust anonymization processes and transparent consent practices help protect individuals’ privacy while still enabling effective AI-driven disaster response efforts.
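The following sketch shows one way these two safeguards might look in practice, under stated assumptions: records carry a hypothetical `consent` flag, names are replaced with keyed hashes (pseudonymization, which is weaker than full anonymization), and a simple group-size check flags records that could still be re-identified from the remaining fields. The field names and the secret key are invented for the example.

```python
import hashlib
import hmac
from collections import Counter

# Hypothetical key; in practice it would be stored securely, never in source code.
SECRET_KEY = b"example-key-do-not-use"


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def smallest_group_size(records, quasi_identifiers):
    """Size of the smallest group sharing the same quasi-identifier values.

    A result of 1 means at least one person is unique on those fields.
    """
    counts = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(counts.values()) if counts else 0


records = [
    {"name": "Ana", "zone": "north", "age_band": "60+", "consent": True},
    {"name": "Ben", "zone": "north", "age_band": "60+", "consent": True},
    {"name": "Cy", "zone": "south", "age_band": "18-39", "consent": False},
    {"name": "Di", "zone": "south", "age_band": "18-39", "consent": True},
]

# Keep only records with consent, and drop the raw name from what is released.
released = [
    {"pid": pseudonymize(r["name"], SECRET_KEY), "zone": r["zone"], "age_band": r["age_band"]}
    for r in records
    if r["consent"]
]
k = smallest_group_size(released, ["zone", "age_band"])
print(len(released), "records released; smallest group size:", k)
```

Here the smallest group has a single member, so that person could still be singled out by zone and age band. A real pipeline would need to coarsen or suppress such records before sharing them.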

Who Is Accountable for AI Decision Errors?

Blame can blur when an AI decision goes wrong, but accountability should still rest with identifiable people and organizations. You need clear liability assignment and robust ethical frameworks to determine who bears the burden when AI missteps happen. Developers, deployers, and overseers all share responsibility, so agreed standards help keep each party’s role transparent. Setting clear guidelines in advance makes it easier to trace errors, assign ethical accountability, and prevent repeat failures.
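One practical way to keep responsibility traceable is to record every AI recommendation together with the model version that produced it and the person who approved it. The sketch below is an assumption about how such a record could look; the `DecisionRecord` class, its fields, and the sample values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionRecord:
    decision_id: str
    model_version: str      # which system produced the recommendation
    recommendation: str     # what the system suggested
    inputs_summary: dict    # what the suggestion was based on
    approved_by: Optional[str] = None  # human reviewer, if any
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def approve(self, reviewer: str) -> None:
        """Record the human who takes responsibility for acting on this."""
        self.approved_by = reviewer

    def is_actionable(self) -> bool:
        """A recommendation may be acted on only after human sign-off."""
        return self.approved_by is not None


record = DecisionRecord(
    decision_id="d-001",
    model_version="flood-risk-0.3",
    recommendation="evacuate zone north first",
    inputs_summary={"zones_considered": 4},
)
before = record.is_actionable()
record.approve("duty officer")
after = record.is_actionable()

# Serialize for an append-only audit log.
log_line = json.dumps(asdict(record))
print(before, after)
print(log_line)
```

The design choice here is that nothing is actionable by default: a named human has to sign off, and the log line preserves who did so and which model version was involved.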

Can AI Algorithms Be Biased in Disaster Scenarios?

Yes, AI algorithms can be biased in disaster scenarios. You need to focus on algorithm fairness to guarantee equitable decision-making, especially when vulnerable populations are involved. Bias mitigation techniques help reduce unfair outcomes, making your AI systems more reliable and ethical. By actively addressing potential biases, you improve disaster response efforts and build trust among all affected communities, ultimately enhancing the effectiveness of AI-driven solutions in critical situations.
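A simple first check for this kind of bias is to compare how often each group actually receives aid under the system's recommendations. The sketch below uses made-up decisions for two hypothetical groups, and the 0.8 review threshold is an arbitrary illustrative value, not an established standard for disaster response.

```python
from collections import defaultdict


def aid_rate_by_group(decisions):
    """Share of requests in each group that were allocated aid.

    `decisions` is an iterable of (group, received_aid) pairs.
    """
    totals = defaultdict(int)
    aided = defaultdict(int)
    for group, received in decisions:
        totals[group] += 1
        aided[group] += int(received)
    return {g: aided[g] / totals[g] for g in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by highest; 1.0 means equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Made-up example: 8 of 10 urban requests aided, 4 of 10 rural requests aided.
decisions = (
    [("urban", True)] * 8 + [("urban", False)] * 2
    + [("rural", True)] * 4 + [("rural", False)] * 6
)
rates = aid_rate_by_group(decisions)
ratio = disparity_ratio(rates)

REVIEW_THRESHOLD = 0.8  # illustrative assumption only
needs_review = ratio < REVIEW_THRESHOLD
print(rates, ratio, needs_review)
```

A low ratio does not prove the system is unfair, since needs may genuinely differ between groups, but it is a signal that a human should look at why the rates diverge.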

How Transparent Are AI Decision-Making Processes in Emergencies?

You might worry that AI decision-making isn’t transparent in emergencies, and some opacity does remain. Even so, many systems now prioritize algorithmic fairness and stakeholder engagement, and developers work to make processes clearer by showing how decisions are made. Ongoing efforts aim to improve transparency so that responders and affected communities understand how AI tools operate. This fosters trust and allows everyone to participate actively in disaster response, even when decisions must be made swiftly.

Conclusion

As you navigate AI-driven disaster response, remember that over 70% of responders worry about ethical challenges like bias and privacy. You must weigh the benefits of faster aid against potential harm or misuse of data. By staying vigilant and prioritizing transparency, you can help ensure AI serves everyone fairly and responsibly. Embracing these ethical considerations isn’t optional; it’s essential to building trust and saving lives effectively during crises.